A/B Testing

About

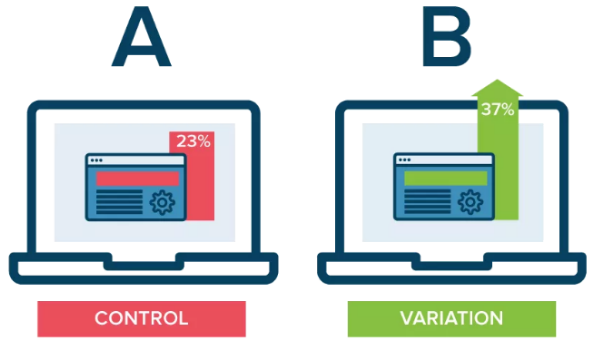

A/B testing is a form of quantitative user research in which a control design is compared with one or more slightly altered designs to see which is most effective in reaching the test goal.

Tests can be performed on most quantifiable elements of your site, including content, emails, and web forms.

A/B testing is also known as split testing, A/B/n testing, bucket testing, and split-run testing.

To carry out an A/B test you need the current data for the goal you are trying to improve, the target data you hope to achieve, and a test hypothesis. The hypothesis states the proposed design solution and its expected outcome.
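The current and target figures also give a rough idea of how many visitors a test needs. The sketch below is one common approximation for comparing two conversion rates, not a method taken from the sources cited here. The function name `sample_size_per_variant`, the 5% significance level, the 80% power, and the example rates are all assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, target_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed per design to detect baseline -> target.

    Uses the standard normal approximation for two proportions.
    alpha and power are conventional defaults, assumed for illustration.
    """
    if not (0 < baseline_rate < 1 and 0 < target_rate < 1):
        raise ValueError("rates must be between 0 and 1")
    if baseline_rate == target_rate:
        raise ValueError("target rate must differ from baseline rate")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired power
    variance = (baseline_rate * (1 - baseline_rate)
                + target_rate * (1 - target_rate))
    effect = target_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical figures: current conversion rate 5%, target 6%.
print(sample_size_per_variant(0.05, 0.06))
```

The smaller the gap between the current and target figures, the more visitors each design needs before a difference can be trusted.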

During tests some visitors are served the control – the current design, design A – and others get a design variation, design B. After a period of testing, the design that performs better for a specific metric is the winner of that test. The more successful design can then either be tested against another variation, or if the hypothesis has been realised, the test can be ended.
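One way to decide which visitor sees which design is to hash a visitor identifier, so that the same visitor always gets the same design on every visit. This is a minimal sketch of that idea, not how any particular testing tool works. The function name `assign_variant`, the visitor ID format, and the experiment name are hypothetical.

```python
import hashlib


def assign_variant(visitor_id, experiment, variants=("A", "B")):
    """Deterministically assign a visitor to a design.

    "A" is the control and "B" the variation. Hashing the experiment
    name together with the visitor ID keeps assignments stable per
    visitor and independent between experiments.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as an integer bucket number.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# Hypothetical visitor and experiment names.
print(assign_variant("visitor-1001", "checkout-promo-box"))
```

Because the assignment depends only on the two strings, no per-visitor state needs to be stored to keep the experience consistent.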

Methodology

- Planning – study website analytics, identify issues, decide your goal and construct your hypothesis

- Create design variations

- Run the experiment

- Review the data

- Decide whether to stick with the control, implement the design variation, or run further tests

(Optimizely.com 2017)

Benefits

- Continuous testing is possible on almost any actionable element on a webpage

- There are fewer risks associated with smaller, incremental changes

- Quicker and cheaper to implement than most other methods of research

- Ease of Analysis – it’s easy to determine the test “winner” based on defined metrics

Limitations

- Limited to testing changes to one single element at a time

- Limited to testing for one single goal or KPI (key performance indicator) at a time

- Incremental process of repeated iterations, with minor design alterations, can be time-consuming

- Tests must reach a sample size large enough for statistical significance to ensure confidence in the result – changes in a small sample may occur by chance

- Improved results can come at the expense of other aspects of that design, e.g. user satisfaction

- Intangible improvements, such as brand reputation, cannot be measured

- Testing only possible on fully implemented designs

(Nielsen J. 2005)
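The point about statistical significance can be illustrated with a two-proportion z-test, a common way to check whether a difference in conversion rates could plausibly have occurred by chance. This is a sketch under assumptions: the function name `two_proportion_z_test` and all visitor and conversion counts are hypothetical, and real testing tools may use different statistical methods.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, p_value) for the difference in conversion rates.

    conv_a / n_a: conversions and visitors for the control (design A).
    conv_b / n_b: conversions and visitors for the variation (design B).
    The p-value is two-sided.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test when no or all visitors converted")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: the same 5% vs 7% split at two sample sizes.
for visitors, conv_a, conv_b in [(100, 5, 7), (10000, 500, 700)]:
    z, p = two_proportion_z_test(conv_a, visitors, conv_b, visitors)
    print(visitors, round(z, 2), round(p, 4))
```

With the same observed rates, the small sample gives a large p-value while the large sample gives a small one, which is why a test should not be stopped before it has enough visitors.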

Case study

Removing Promo-code Boxes from Checkout Page Increased Total Revenue by 24.7%

https://vwo.com/blog/promo-code-box-ecommerce-website-bleeding-dollars-ab-test/

4.5.1 Introduction

In this case study, A/B testing software company Visual Website Optimizer (VWO) reviews how marketing agency Sq1 used A/B testing to increase sales for sportswear manufacturer Bionic Gloves.

The A/B test hypothesis was: “Removing the Special Offer and Gift Card code boxes, from the shopping cart page, would result in more sales and less cart abandonment”.

4.5.2 Critical Analysis

In this A/B test the hypothesis may have been too strict. It created a binary situation in which only two states were possible: a checkout page with the Special Offer and Gift Card code boxes included, or a checkout page without them.

While A/B testing is ideal for this type of test, no attempt was made to test other variations. Instead, one test was run for 48 days, revealing a revenue increase of 24.7%. This satisfied the hypothesis, and the test ended.

Following the hypothesis formulation method of “proposed solution” and “anticipated results”, a less strict hypothesis such as “Make changes to the Special Offer and Gift Card code boxes, to increase sales and reduce cart abandonment” would have allowed further tests. These might have revealed further improvements, such as increasing sales and reducing abandonment while still retaining the Special Offer and Gift Card code boxes.